Ever run into this? You're working in OneLake files, but you accidentally forget to sign in, and boom, the file doesn’t sync. 😬

Now imagine this happens after you've made tons of changes to a simple Excel file that holds a mapping structure. No sync. No backup. Changes gone. Damn it!

As an engineer, I can deal with it. But if this happens to a business user trying to update files? I can totally imagine the frustration.

That’s why I recently switched this Excel/CSV file to a SharePoint list. Sounds old-school, I know, but it still works like magic. And best of all, it integrates super fast via the Graph API in Python.

Now I control the update frequency and reload mechanism. The changes are always live, still in Excel format, and I can easily configure a Service Principal to automate integration.

Let’s dive into how this works. 👇

Preparing access

First things first—we need a SharePoint list to pull data from. In my case, I’m using a mapping table to link string fields from a database source to specific codes and categories, which I want to sync to Fabric.

Before we can integrate with the list, we need to set up a Service Principal that has permission to access and download the content. Here's how:

- Create a Service Principal in the Entra ID (Azure Portal).

- Add the required API permissions to the app registration:Make sure to include: Microsoft Graph > Sites.ReadWrite.AllAn admin must grant consent for these permissions.

- Generate a client secret for the Service Principal and copy the value immediately, you won’t be able to retrieve it later.

- Store the credentials securely:Save the Client ID, Tenant ID, and Secret Value in a secure location, preferably in Azure Key Vault.

The last step is to grant the Service Principal access to the SharePoint list. You can follow this link and make sure the permissions are correctly set:

<AppPermissionRequests AllowAppOnlyPolicy="true">

<AppPermissionRequest Scope="http://sharepoint/content/tenant" Right="FullControl" /> </AppPermissionRequests>

Retrieving data

Now that everything’s set up, we can integrate the SharePoint list with Python in a Fabric notebook. First, we retrieve an access token from the Graph API using our stored credentials. We use the MSAL library to acquire the token via the ConfidentialClientApplication.

headers={'Authorization': f'Bearer {self.access_token}'}

Next, we retrieve the Site ID for your SharePoint environment using:

site_url =

f"https://graph.microsoft.com/v1.0/sites/root:/sites/{source_layer}"

site_resp = requests.get(site_url, headers=headers).json()

site_id = site_resp['id']

You’ll need this Site ID to correctly call your SharePoint list using the proper URL. With the site_id and the list name (used as the table name in the URL), you can fetch the data using the function below.

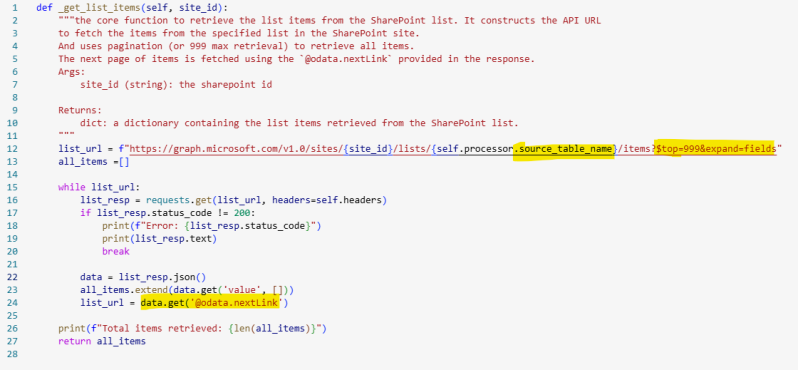

In this example, we select the top 999 records, since the default is only 200. We also add pagination to ensure all records are retrieved, especially useful if your list contains a lot of entries. For that, we use the @odata.nextLink property.

Although you now have the data in a list, which you can store as a Pandas DataFrame, one issue is that the column names aren’t the same as in your original SharePoint list. Instead, you’ll see generic names like title, name, field_{x}, etc., these are just default placeholders.

But the actual column names would be much more useful. You can retrieve them using the /columns endpoint:

columns_url =f"https://graph.microsoft.com/v1.0/sites/{site_id}/lists/{self.processor.source_table_name}/columns"

Once you’ve retrieved the display names of your columns via the API, you can easily rename them in your DataFrame.

Now that the data is cleaned up, you can store it in a Delta table and display it in Fabric.

I've successfully retrieved the SharePoint lists using the Graph API and integrated the data into my Fabric environment. This setup makes it easy to schedule refreshes and build logic on top of the data, without the syncing issues I encountered with OneLake. Business users can maintain the lists just as easily as they would in Excel, which keeps things intuitive and user-friendly. But hey, it's still not perfect.

Let’s see what Microsoft has in store for us in the future and find out how we can take this even further! 🚀